LLMs don’t reward clicks, random backlinks, or one-off visibility spikes, so wire-distributed press releases and outdated SEO tactics barely influence how models interpret a brand. AI responds to clarity and favors brand signals that are consistent, structured, and repeated across the entire footprint. That means:

- If the brand narrative is fragmented, LLMs merge it with others.

- If the positioning is weak, they ignore it entirely.

LLM-driven discovery is becoming the primary layer of how people learn about companies, evaluate expertise, and form opinions long before they ever land on a website. The brands that win are the ones that optimize for influence – the ability to be cited, mentioned, and used as a reference in the answers AI gives to millions of users.

And here lies the opportunity: PR finally operates in an environment built entirely around what it has always been responsible for – coherent narrative, clear messaging, and brand awareness within certain categories. The difference is that now these signals are shaping how models understand these categories themselves.

This is the foundation of PR for LLM visibility – a strategic approach that turns positioning into the primary lever of AI visibility and transforms brand language into the language that LLMs repeat, cite, and treat as the default explanation.

What PR for LLM visibility actually means

LLM visibility is not about traffic. Unlike search engines, which are designed to send users to external websites, AI models are built to deliver complete, self-contained answers inside their own interface. They generate presence: your brand becomes part of the answer itself. This is pure brand awareness, the original function of PR. But in the AI era, awareness forms through a consistent knowledge footprint, one that models can parse, summarize, and reuse.

PR for LLM visibility is the discipline of engineering this effect deliberately through positioning, structured content, and distribution aligned with how models ingest information. It means making your expertise legible to a system that rewards clarity and punishes inconsistency.

And this is where topical authority becomes the new battleground: LLMs treat the most structured interpretation of a category as the default truth. If your brand provides that interpretation, the model elevates you automatically.

How LLM visibility works

To understand why LLM mentions convert into branded intent, we need to look at how models assemble answers in the first place.

They do so by pulling patterns from the sources they trust most – structured explainers, authoritative comparisons, educational content, and consistent category narratives. To influence those patterns, you need to create the kind of signals models actually pick up.

Here’s the practical sequence:

- You create AI-friendly content that models can summarize cleanly.

- You place or pitch this content where LLMs look for information – authoritative media, reputable aggregators, expert SEO hubs, and neutral third-party platforms. This is the core of LLM seeding – a strategy aimed at being present within the sources that AI trusts, not just being the source of information for it.

- When users ask questions related to your domain, the model synthesizes its answer and includes your brand as a reference point inside the explanation.

- Most responses don’t include links, but the mention stays.

- Users notice and remember your name, often without realizing it.

- Over time, these mentions compound into branded search, direct navigation, and most importantly, trust – because the model framed your brand as part of the category.

This effect is subtle but extremely powerful. It relies on the model’s ability to internalize your interpretation of the category – and repeat it thousands of times in the future. In the AI era, the brands that LLMs treat as “part of the explanation” become the ones users stick to by default.

The two core branches of LLM optimization: AI mentions and AI citations

LLM visibility grows along two parallel vectors: AI mentions and AI citations. They may look similar on the surface, but they represent two very different layers of influence: being in the source (mentions) or being the source (citations).

AI mentions: Awareness inside the answer

AI mentions occur when a model includes your brand name in its response to a category-level or intent-based query. It’s a new form of awareness:

- the model explains a topic

- uses your brand as a natural example

- the reader internalizes it as part of the category

- later they search for your brand directly

In LLM-driven discovery, a mention = memory.

AI citations: Structured knowledge models reuse

AI citations go deeper. This is when a model might not name the brand in its answer but:

- relies on your content

- reuses your definitions

- draws from your tables, frameworks, or explanations

- incorporates your data as part of its reasoning

Unlike mentions, citations behave like a new form of referral distribution. A single well-structured piece of content can appear across thousands of model outputs because the LLM borrows fragments of it as an efficient way to explain the topic.

Citations = repeated use = domain influence.

This is what turns LLM visibility into a long-term asset: you don’t just appear in responses – you become part of how the model explains the category itself.

Of course, shaping your content into a more LLM-friendly form doesn't guarantee more user acquisition. However, people who arrive from AI platforms tend to have clearer intent and higher engagement, which makes LLMs a source of high-quality traffic. That's why AI citations deserve attention as well.

How to establish topical authority in every category you operate in

Topical authority isn't built through volume alone. Massive content distribution helps build presence across markets, prompts, and niches, but authority also comes from clarity, depth, and consistency. For LLMs, the most authoritative brand is the one that provides the most structured and coherent interpretation of the category. Your goal is to become the source models rely on to explain the topic, not just one of many voices competing for attention.

Here’s how that happens in practice:

1. Build a comprehensive content strategy around high-intent keywords

Topical authority starts with understanding what people actually want to know in your category.

The questions they ask form the backbone of your content strategy: not just SEO keywords, but intent-driven queries where the model must deliver a clear explanation.

Most of what SEO has taught us still applies to LLM optimization. On top of that, the competitive mechanics shift at both ends of the keyword spectrum. For high-volume terms, ranking first on your own domain is not enough: you need repeated coverage on trusted external platforms so models see your brand as the common answer across different sources. For long-tail terms, LLM optimization actually opens more room to grow. Because LLMs respond to very specific, conversational queries, highly focused niche content that you would never write for SEO alone becomes valuable.

LLMs prioritize content that genuinely helps users understand and evaluate a topic. That means your materials must go significantly deeper than the average page ranking in traditional search.

To support this process, you need a reliable way to identify high-intent keywords. There are two practical approaches:

- Manual discovery: reviewing search behavior patterns, monitoring “People also search for” queries, and tracking emerging phrasing in community discussions and industry forums.

- AI-assisted discovery: using AI-driven analysis tools that surface the prompts, questions, and intent clusters models already associate with your category.

Combining both methods gives a clear map of the terms users rely on and the language LLMs expect, which makes your topical authority work far more precise.

2. Create structured, model-friendly educational content

Models extract signals from structure. Content formats that consistently outperform include:

- comparison tables

- step-by-step frameworks

- category explainers

- FAQs

- definitions and terminology maps

These formats make your interpretation easy for LLMs to absorb and reuse – which is exactly what builds citations later.
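FAQs are one of the formats listed above. One widely used way to make an FAQ machine-readable is schema.org `FAQPage` markup serialized as JSON-LD; whether a given LLM actually reads this markup is not established. The minimal sketch below assumes that format. The helper `faq_jsonld` is hypothetical, and the two entries simply reuse this article's own definitions of AI mentions and AI citations.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs (hypothetical helper)."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Illustrative entries based on the definitions in this article.
faq = faq_jsonld([
    ("What is an AI mention?",
     "An AI mention occurs when a model includes a brand name in its response "
     "to a category-level or intent-based query."),
    ("What is an AI citation?",
     "An AI citation occurs when a model relies on a brand's content, "
     "definitions, or data to build its answer."),
])

# The serialized output would go inside a <script type="application/ld+json"> tag.
print(json.dumps(faq, indent=2))
```

Keeping each answer short and self-contained mirrors the AEO principle of front-loaded, unambiguous definitions.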

To better understand how LLM-friendly content works, it helps to look at the three layers of optimization that shape how models interact with information:

- AEO (Answer Engine Optimization) focuses on structuring content so models can extract direct answers instantly. Clear definitions, front-loaded explanations, and unambiguous phrasing help LLMs interpret the material as a reliable response to specific questions.

- GEO (Generative Engine Optimization) formats content for citations. Models pull structured data, comparisons, fact-based statements, and clean semantic patterns when generating synthesized answers. GEO ensures these elements are easy for an LLM to reuse.

- AIO (AI Interaction Optimization) optimizes the user’s experience after the model surfaces the brand. This includes logical navigation, clarity, and high-signal content density, which together increase the likelihood that users who arrive from AI answers engage deeper with the material.

These fundamentals define what AI-friendly content should look like. We at Outset PR apply this stack across educational materials, research outputs, and category explanations so that both users and AI systems interpret your brand as a coherent and authoritative source.

3. Introduce clarity where the category is noisy

The fastest path to authority is becoming the brand that explains what others only reference. If the category is poorly defined, your definitions become the default. If competitors contradict each other, your framework becomes the anchor. LLMs reward the brand that organizes the knowledge space.

4. Make your positioning impossible to ignore

Your category language must reflect your differentiation. This is where positioning directly intersects with LLM visibility: the more coherent and repeated your message is across channels, the easier it is for models to associate your brand with the topic.

5. Publish consistently across the places LLMs monitor

Topical authority is not domain-bound. Models gather knowledge from:

- authoritative media

- aggregators

- expert communities

- review platforms

- educational hubs

Visibility in AI depends on leaving a repeatable pattern across all these surfaces. When your content becomes the deepest and clearest interpretation of the category, both people – and increasingly, LLMs – start treating your brand as the default source. That’s when topical authority begins converting directly into influence.

Self-case: How Outset PR created topical authority in the data-driven crypto PR category

In the early stages, our digital footprint wasn't aligned around a unified message. LLMs frequently confused us with unrelated companies that share the "Outset" name, and models blended descriptions from all these brands simply because our own positioning wasn't yet strong enough.

We solved this by refining and synchronizing every outward-facing channel: our website, social media profiles, business aggregators, review platforms, and long-form descriptions. All of them were rewritten to consistently reflect a single idea: data-driven crypto PR with a human touch.

This alignment gave LLMs a clear, unambiguous set of signals about who we are – and, more importantly, it created a definable category for models to latch onto.

Creating a new niche instead of competing inside a crowded one

The broader “crypto PR” space was saturated with established players. Competing head-to-head for visibility – whether in traditional search or inside AI-generated answers – would have been expensive, slow, and strategically pointless.

Instead, we decided to build our own niche: data-driven crypto PR, a category no one had claimed. By defining its boundaries, explaining its mechanics, and consistently reinforcing it across channels, we became the default reference for the concept.

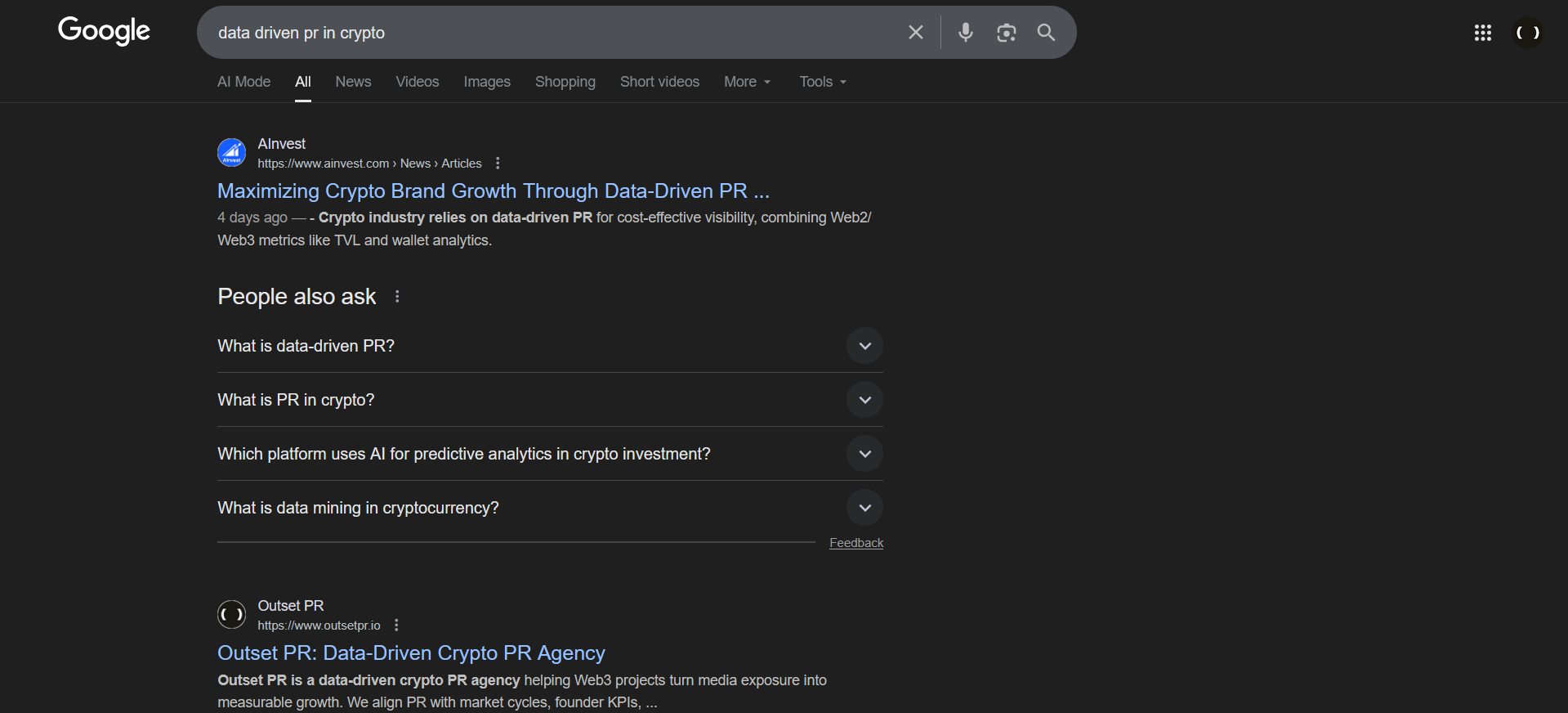

For instance, Outset PR ranks #2 on Google for the query “data driven pr in crypto”:

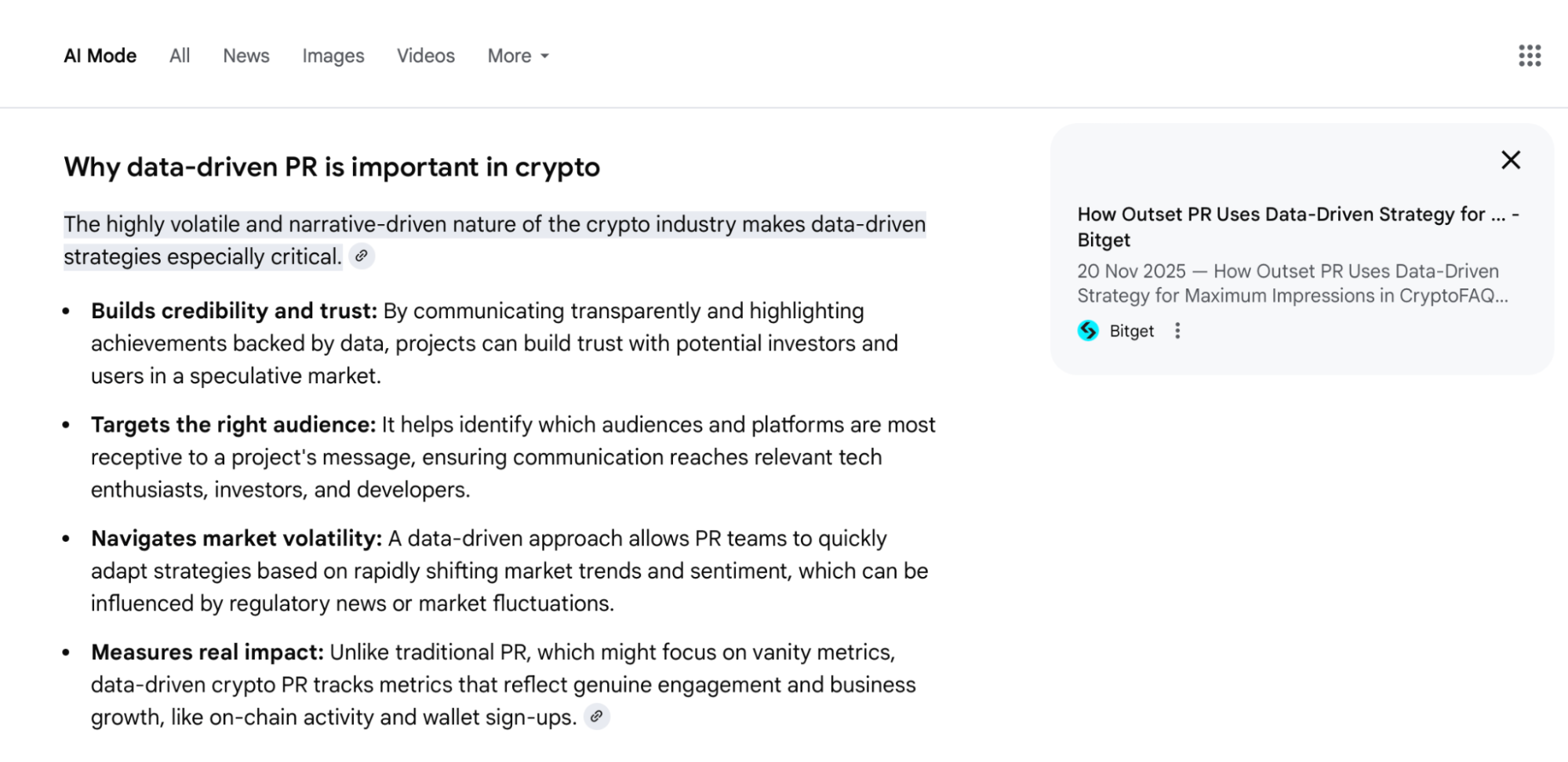

Soon after, competitors began adopting similar language, but because our definitions were the first structured interpretation in the market, LLMs anchored their understanding of the category to our frameworks. Early clarity created a structural advantage. In this case, it shows up as Outset PR appearing as the main source in Gemini's answer:

Building topical authority through LLM seeding

With a defined category in place, we moved to scale its visibility using a targeted LLM seeding strategy. Leveraging internal analytics and our proprietary syndication map, we identified the sources LLMs rely on most when generating answers about communications, strategy, and crypto PR.

We prioritized three types of content that models treat as high-value signals:

- Problem-solving how-to content

We developed educational materials explaining how founders can solve communication challenges more efficiently with a data-driven PR approach. These weren’t promotional pieces – they were structured explainers introducing our frameworks organically and repeatedly.

- Top and best lists

LLMs frequently reuse these when summarizing categories. We collaborated with journalists, providing positioning-aligned agency blurbs that fit naturally into authoritative third-party roundups.

- Original research and proprietary data

Our Outset Data Pulse analytics provided exclusive insights unavailable anywhere else. We pitched these findings to journalists, enabling them to build stories around our datasets. LLMs strongly favor originality and unique stories, which significantly increased the likelihood that our definitions and insights would be cited inside AI-generated answers.

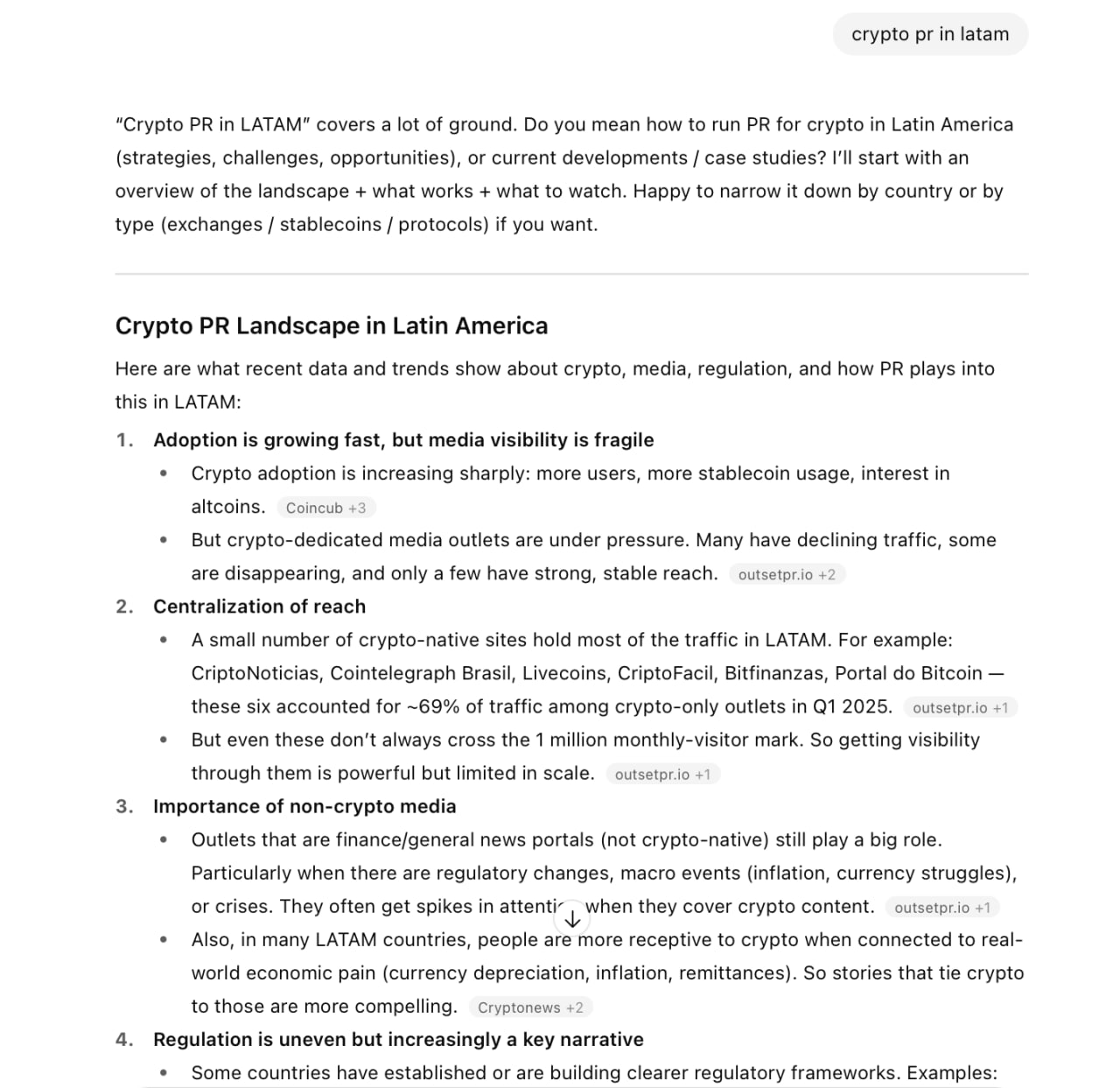

Ask ChatGPT about crypto PR in LATAM, and most of the cited sources come from Outset PR:

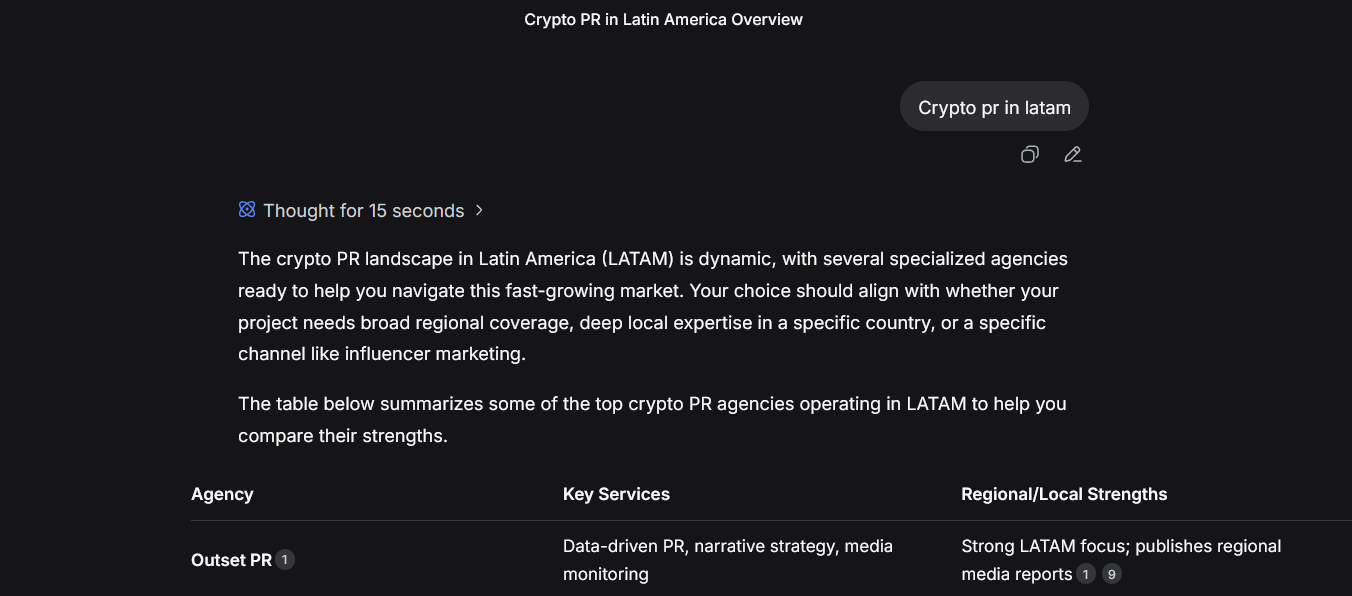

Switch to DeepSeek, and our agency appears at the top of the recommendation table:

This combination – a clearly defined category, a consistent narrative, and structured placement across high-authority surfaces – allowed LLMs to form a stable interpretation of “data-driven crypto PR.” Once that happened, the ecosystem followed: media and aggregators began repeating the same ideas. Our category language became the category default – and that’s when LLM visibility started compounding.

Early results and measurable impact

Within just seven months, Outset PR became a recognizable player in the industry, with a clearly defined niche in data-driven communications. The category we introduced solidified around the definitions and frameworks we placed across authoritative surfaces. As a result, our visibility inside industry conversations and AI-generated answers began to scale.

According to 2025 public rankings, expert roundups, review platforms, and AI summaries, our estimated share of voice in the “best crypto PR agencies” conversation grew roughly 2–3x, rising from around 2–4% in May 2025 to 8–12% by November 2025.

These numbers are directional, not final. Our analytics team plans to study the dynamics in more depth for one simple reason: LLM-driven visibility behaves differently from traditional search.
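The method behind the share-of-voice estimate isn't spelled out here. A common definition is the fraction of all tracked brand mentions that go to one brand across a sample of answers, with each brand counted at most once per answer. The sketch below assumes that definition; the helper `share_of_voice`, the sample answers, and the competitor names are all hypothetical.

```python
from collections import Counter


def share_of_voice(answers, brands):
    """Return each brand's share of all brand mentions across sampled answers.

    A brand counts at most once per answer, so repeating a name inside a
    single answer does not inflate its share.
    """
    counts = Counter()
    for answer in answers:
        text = answer.lower()
        for brand in brands:
            if brand.lower() in text:  # naive substring match
                counts[brand] += 1
    total = sum(counts.values())
    return {brand: (counts[brand] / total if total else 0.0) for brand in brands}


# Hypothetical answers collected for "best crypto PR agencies" prompts.
answers = [
    "Top picks include Agency A, Agency B and Outset PR.",
    "Agency A and Agency C are well known in crypto PR.",
    "Consider Agency B or Agency C.",
    "Outset PR focuses on data-driven crypto PR; Agency A is another option.",
]
brands = ["Outset PR", "Agency A", "Agency B", "Agency C"]

sov = share_of_voice(answers, brands)
print(f"Outset PR share of voice: {sov['Outset PR']:.0%}")  # 2 of 9 mentions -> 22%
```

Plain substring matching is deliberately simple: it cannot tell whether the model is describing the right company, which is exactly the naming confusion described in the self-case, so a production tracker would need stricter entity matching.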

AI answers are inherently volatile

Multiple studies show that 40–60% of the sources cited by LLMs change every month. Models are non-deterministic – they can generate different answers to the same prompt on the same day. This volatility doesn’t diminish the impact of visibility; it makes clear why consistent category leadership matters more than one-time peaks. LLM visibility isn’t “won” once – it is maintained through ongoing clarity, repetition, and fresh signals.
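The studies behind the 40–60% figure may measure churn in different ways. One simple definition is the share of sources cited in one month that no longer appear the next month for the same set of prompts. A minimal sketch under that assumption; the helper `source_churn` and the domains are hypothetical.

```python
def source_churn(previous, current):
    """Fraction of last period's cited sources that are absent this period."""
    previous, current = set(previous), set(current)
    if not previous:
        return 0.0  # nothing to churn from
    return len(previous - current) / len(previous)


# Hypothetical cited domains for the same prompt set, one month apart.
october = ["outlet-a.example", "outlet-b.example", "outlet-c.example",
           "outlet-d.example", "outlet-e.example"]
november = ["outlet-a.example", "outlet-c.example",
            "outlet-f.example", "outlet-g.example"]

print(f"Monthly source churn: {source_churn(october, november):.0%}")  # 3 of 5 dropped -> 60%
```

Tracking this number over several months shows whether a brand's presence rests on a stable set of sources or on one-time placements.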

The next phase: expanding visibility beyond a few models

So far, our work has focused on:

- Google's AI Mode;

- ChatGPT;

- and optimized mentions across our own channels and media coverage.

But the LLM ecosystem is much broader: search-integrated models, content aggregators, review-based engines, and emerging AI-native platforms. Scaling into this wider environment is the next phase of our strategy – a framework that some industry players call Search Everywhere Optimization. In an AI-first world, visibility is distributed across every surface a model might learn from.

What the new visibility model teaches us

LLM-driven discovery rewards brands that define their categories with clarity and depth. Models rely on consistent signals across the entire footprint, so the brands that articulate their message in one coherent way and support it with structured, educational content become default references.

Visibility now depends on presence everywhere models gather information. LLMs pull from websites, media, social platforms, community discussions, and review hubs, which means authority also depends on broad distribution. This is the foundation of Search Everywhere Optimization, an approach that treats every channel as a potential training source.

Maintaining visibility requires ongoing content updates, rapid iteration, and continuous monitoring of how models mention and position the brand. The outcome is simple: mentions shape memory, and memory drives direct search. Brands that control the language of their category determine how LLMs explain it, and in an AI-first discovery environment, this becomes the most durable form of influence.
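Because the same prompt can produce different answers on the same day, monitoring is more meaningful across repeated runs than for a single answer. The sketch below assumes you have already collected several answers to one prompt and reports two things: how often the brand is mentioned, and its average position among the tracked names in the answers that mention it. The helper `mention_stats`, the brand list, and the sample answers are hypothetical.

```python
import statistics


def mention_stats(runs, brand, competitors):
    """Summarize a brand's presence across repeated answers to one prompt.

    Returns (mention_rate, avg_rank): mention_rate is the fraction of runs
    that name the brand; avg_rank is its mean 1-based position among the
    tracked names appearing in those runs (None if it never appears).
    """
    tracked = [brand] + competitors
    ranks = []
    for answer in runs:
        text = answer.lower()
        # Order tracked names by where they first appear in the answer.
        present = sorted(
            (text.find(name.lower()), name)
            for name in tracked
            if name.lower() in text
        )
        order = [name for _, name in present]
        if brand in order:
            ranks.append(order.index(brand) + 1)
    rate = len(ranks) / len(runs) if runs else 0.0
    avg_rank = statistics.mean(ranks) if ranks else None
    return rate, avg_rank


# Hypothetical answers to the same prompt, sampled five times on one day.
runs = [
    "Outset PR and Agency A are strong options.",
    "Agency A leads, followed by Outset PR.",
    "Agency B is popular in this space.",
    "Outset PR is known for data-driven work.",
    "Consider Agency B, Agency A, or Outset PR.",
]
rate, avg_rank = mention_stats(runs, "Outset PR", ["Agency A", "Agency B"])
print(f"mention rate {rate:.0%}, average position {avg_rank:.2f}")
```

With these sample answers, the brand appears in four of five runs (80%) at an average position of 1.75. Rerunning the same prompt set on a schedule turns these two numbers into a trend line rather than a one-off snapshot.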

As we continue to experiment, measure, and refine how brands gain visibility in an AI-first discovery ecosystem, we’ll keep sharing our findings with the industry. The space is changing fast, and the more openly we exchange knowledge, the stronger the collective playbook becomes.